I was really excited for the next-gen update so I could play the new, improved Witcher 3 for the third time on my badass new PC (Ryzen 9 5900X + 32 GB RAM + RTX 3070).

I thought there was no way in hell this release wasn't gonna work flawlessly after the Cyberpunk 2077 fiasco. Surely CDPR had learned their lesson.

But to my surprise that is not the case.

This release is rushed again and, of course, not a good one.

It seems game releases these days are indeed a joke. It doesn't matter that you have a 3 thousand dollar gaming PC. You still can't play something when it is released.

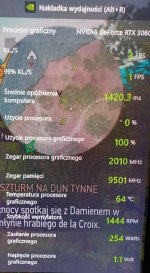

I get ~10 FPS in the game at 4K with DLSS Performance and RT on (except RT shadows, which are off) with an RTX 3070. Hairworks is off. The rest of the graphical settings are on Ultra (not Ultra+), except the last two, which are on Ultra+.

I also get weird artifacts on the screen. The image doesn't fill the whole screen; it looks kind of cropped.

Q: Does anyone have or know of a fix or workaround?
