Hello,

I seem to be getting only just over 60 fps no matter what the in-game settings are. With everything on Ultra I get anywhere from 59 to 65 fps, and with everything on Low I get the same performance. I don't believe this is a VSync issue, although I could be wrong: if it were VSync-related, I'd expect never to go above 60 fps at all. My system specs are below; let me know if anyone has any suggestions.

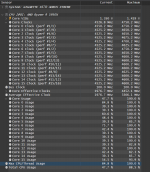

I've also noticed that CPU utilization is only around 20-30% and GPU utilization is anywhere between 50% and 80%, which both seem a bit low to me.

System specs:

- GPU: RTX 3090 Strix
- CPU: AMD 5950X
- RAM: 32 GB DDR4 at 3200 MHz

Also worth noting: I'm running the game at 1440p and 144 Hz on a Dell S2716DG monitor.

I've also tried completely uninstalling and reinstalling my video drivers to see if that would make a difference, but it did not.

Thanks!
