You have to optimize to some standard, otherwise it's not optimization.

Most PCs and consoles use Nvidia-based GPUs, so it only makes sense to optimize for the most common type of GPU.

This does not mean it will only run on NVidia GPUs, only that it runs best on them.

I mean, the PS4 and Xbox use the same AMD GPU, as will their next-gen consoles.

That aside, no, it won't only work on Nvidia cards; I'm not sure that is even possible. Also, games never show the kind of bias toward one or the other that synthetic benchmarks do.

The Witcher 3 ran much better on Nvidia, and we have ray tracing, which is currently exclusive to the RTX family.

And it's only used by less than 1% of people who have gaming PCs. It will not be optimised for this to the extent that performance on anything else would be unacceptable. The Witcher 3 wasn't; no idea why anyone would think this would be.

Learn something new every day. Thanks!

You have to optimize to some standard, otherwise it's not optimization.

Most PCs use Nvidia-based GPUs, so it only makes sense to optimize for the most common type of GPU.

This does not mean it will only run on NVidia GPUs, only that it runs best on them.

This is so not true!

The game should of course run fine on most modern gaming PCs. The fact that the game releases on current gen consoles makes this pretty obvious.

Still, I believe Nvidia will try to optimize the heck out of Cyberpunk together with CDPR, especially regarding ray tracing.

If this game ends up running reasonably well with ray tracing enabled on the mid- to low-tier RTX cards, this would be a huge success for Nvidia.

They will both want the ray tracing implementation to run really well, and they probably have the time to do that. Hell, the next-gen consoles are going to have RT from AMD, so by the time this game comes out the AMD version should be just around the corner, if not already announced.

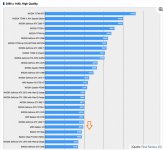

I recently heard that Final Fantasy 15 is optimized for Nvidia. I found benchmarks with the new AMD Navi GPU generation. This is how it looks when a game is optimized only for Nvidia GPUs.

Are you doing the same with Cyberpunk 2077?

Nvidia is more or less the standard. Even non-Nvidia cards run more or less the same; you will see at most a 3% drop-off.

CDPR has always been close with Nvidia, and optimized for their GPUs first. You'll notice that their games tend to have Nvidia-specific graphical features on PC, such as Hairworks.

It's unfortunate, and perhaps it will change in the future, but I would expect the same with 2077. That said, I personally stand to gain because I'm an Nvidia user myself.

Having Nvidia-specific graphical features doesn't mean that the game is optimized for Nvidia. By the way, if you see that a certain game works better on Nvidia, that means they did it deliberately.

And stop calling it ray tracing, because RTX does maybe 0.1% of what ray tracing really is. Nvidia RTX is a fraud, and it's here to rob you.

Consoles are the standard today, unfortunately, not Nvidia or AMD. But consoles have AMD hardware, and this tells you something.

That AMD costs less?

Consoles have AMD hardware due to business agreements and contracts.

Well, from what I've noticed, most of the time when a game is supported by NVIDIA or AMD, that manufacturer's graphics cards perform better than their equivalents from the other manufacturer. Plus there are some special features from that manufacturer.

It's just slightly better optimized and uses features from that manufacturer: NVIDIA HairWorks, PhysX, or AMD's TressFX, and more.

That AMD can't compete in the PC GPU market, so they at least make good CPUs and GPUs for consoles?

That AMD costs less?

Mostly that AMD can produce both CPU and GPU hardware; not the best of either, but no extra parties to deal with.

Unbelievable!?

All today's games are primarily made for consoles, and consoles have AMD hardware. So how can you say that games are optimized for Nvidia?

Consoles are the standard, and that's why graphics are stagnating. Publishers don't want their game to look much different between PC and consoles, even though PC hardware is so much more powerful.

Nvidia has stronger hardware, hence better performance, but it's also 2 times more expensive. That's wrong.

This does not mean it will only run on NVidia GPUs, only that it runs best on them.

That's not even strictly true. Lots of games optimized for certain hardware get hit with driver issues that make that moot.

Anyone with an AMD card is going to be fine.

All today's games are primarily made for consoles, and consoles have AMD hardware. So how can you say that games are optimized for Nvidia?

Because PC tuning and development isn't being done in an Xbox or PS environment anyway, so it's irrelevant.

Consoles are standard, and that's why graphics is stagnating. Publishers don't want their game graphically to be much different between PC and consoles

This isn't even remotely true when the port isn't just a lazy copy and paste.

Graphics aren't stagnating. You're just getting slower progress because the next jumps require so much more horsepower for minimal improvements.

Nvidia has stronger hardware, hence better performance, but it's also 2 times more expensive. That's wrong.

You can't point to the RTX 2080 and say "2 times more expensive" when there's no comparable card in AMD's range yet. Get out with the hyperbole. The top-of-the-line non-ray-tracing card is not 2 times more expensive than AMD's flagship.

LOL!

All games, including console games, are made on a PC and then ported to the consoles through specific dev kits. Game development in the console environment does not exist. What exists is game development for the console environment.

But the problem is that many ports are a product of laziness. And it's not only graphics, but also the gameplay elements that must be developed for the consoles, gamepads for example.

Again, graphics are stagnating only because of the consoles, and that's a fact. This is why people today can play these multiplatform games in 2k or 4k, which was not even close to possible when games were PC exclusives. The best example of this is 2007's Crysis.

I can point to the RTX 2080 because in 2009 I paid 450 euros for the fastest Nvidia graphics card, which was the fastest graphics card back then. Now the fastest card costs 1300 euros and above.

I'm obviously not talking about developing IN the console. I wasn't aware that needed to be spelled out.

Yes, they're made on a PC, but the dev kit is the "console environment" I'm talking about. It's not really a port to work off of the dev kits. There's a reason they're PC ports and not the other way around.

But the problem is that many ports are a product of laziness. And it's not only graphics, but also the gameplay elements that must be developed for the consoles, gamepads for example.

Which goes back to the point: they're developed in a different process than PC games because of the environment they're working in. Lazy ports just take that code and make it work on PC rather than having separate development. There's a reason games like Metro and TW3 pushed graphical boundaries on PC and games like Darksiders didn't even have graphics options beyond resolution. Consoles certainly didn't stop Metro from tossing on heaps of tessellation in Last Light when the tech was new, and they didn't stop TW3 from trying to implement HairWorks, etc.

You're right on controls: the number of buttons on a gamepad limits things. But really, outside of sims, this doesn't matter at all. There really isn't a need for more functions than that. And frankly, I don't want to play a game where I need 50 key bindings to cover all my bases.

Again, graphics are stagnating only because of the consoles, and that's a fact. This is why people today can play these multiplatform games in 2k or 4k, which was not even close to possible when games were PC exclusives.

Those resolutions weren't even allowed for in any games until the past few years. You're forgetting how new ultra HD resolutions are. They have only just now become mainstream. PC-exclusive games weren't even being developed with them in mind until recently because the tech wasn't there yet.

The biggest limiting factor on that was VRAM anyway, and consoles absolutely did NOT stop GPU makers from shoving more VRAM in. Once higher resolutions started becoming a thing, you saw how quickly the GPU makers started boosting VRAM. Everything was 2-4 gigs for a long time and we shot up to 12 real fast. To act like consoles were the limiting factor is so misguided. You have to remember that the GPU makers are always pushing tech, and they do a lot more than just consumer graphics cards.

Tech has come a LONG way since the Xbone and PS4 launched. Consoles haven't held back much; it's just the uneven way in which the tech has developed. To blame consoles for tech stagnation when devs were still figuring out how to use the ever-expanding mass of CPU threads they had, and all the new features each subsequent API came out with, is just not fair at all.

Do you even play console games often? Putting the PS4 versions side by side with the PC version shows a stark difference in many cases.

The best example for this is 2007 Crysis.

That's a terrible example. Crysis was so terribly optimized. You couldn't run it on anything because they never optimized it properly. Crysis Warhead took most of the same tech and made it run twice as smoothly in like a year's time.

Crysis wasn't a good example of games pushing boundaries in a healthy way. It was a great case of devs way overestimating their ability to optimize features and completely overestimating what the current hardware was capable of. They didn't succeed in pushing graphics so much as they flew too close to the sun and managed to turn it into a marketing point. They dumbed their own engine down before it ever touched consoles because even they knew they went too far.

I can point to the RTX 2080 because in 2009 I paid 450 euros for the fastest Nvidia graphics card, which was the fastest graphics card back then. Now the fastest card costs 1300 euros and above.

In 2009, all the cards were operating on the same playing field though. This is new tech that AMD doesn't have out yet. You can't compare something entirely different to AMD's stuff. It makes far more sense to compare a 1080 Ti. Wait till AMD has a ray tracing card to compare with the 2080.

This is like comparing the price of an AGP card to when PCIe was brand new tech. This is just the price you pay to be an early adopter, and it isn't really a relevant comparison for matching price points.