Hi, I'm trying to get my PC ready for the DLC. I did notice that the requirements went up.

Would it be best to just wait until it is out, and then look at benchmarks? Probably, I think.

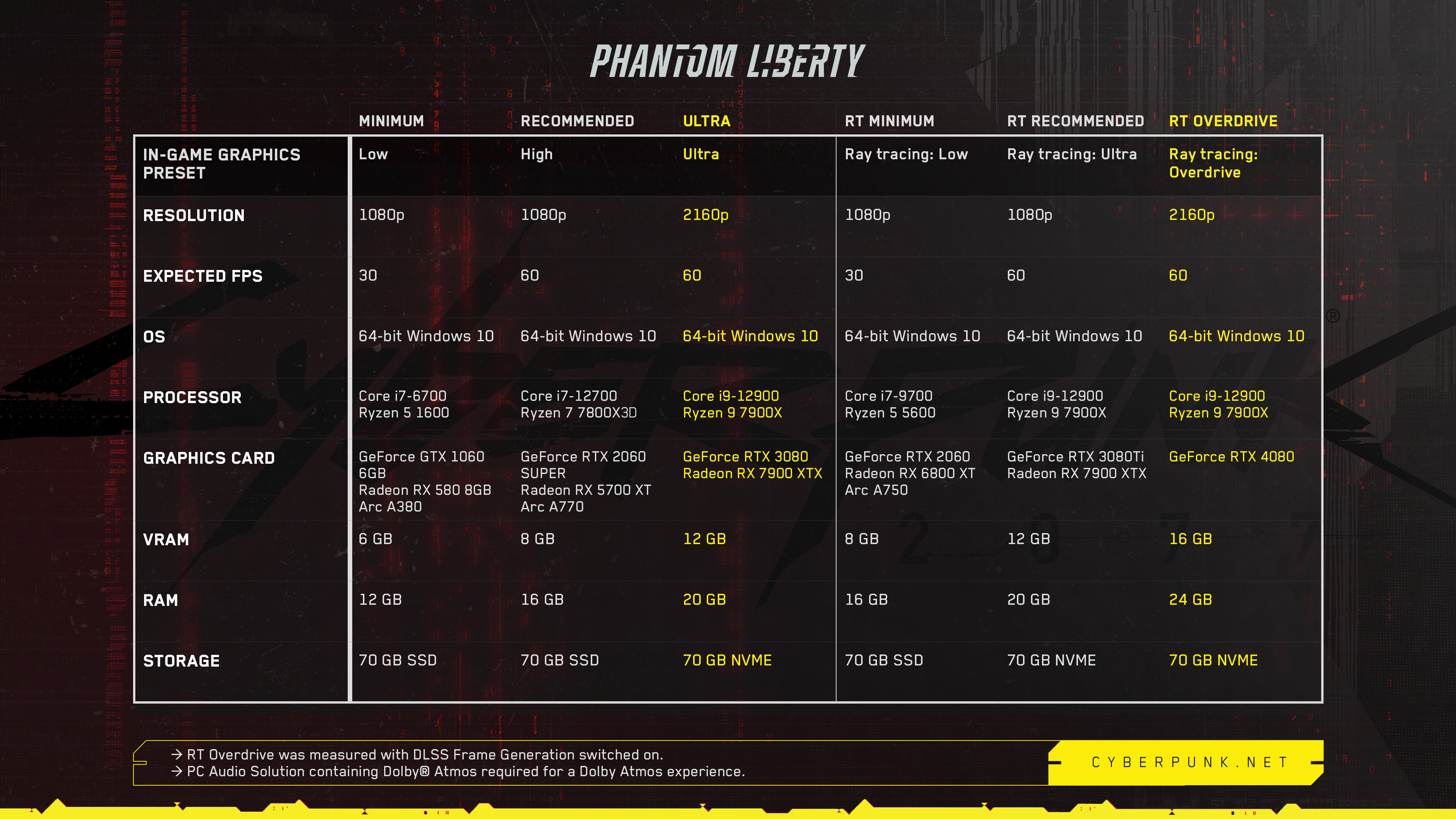

Right now, I have a 12700K and an RTX 3070. I have a 1080p screen and a 4K screen, but so far I have played CP at 1080p on RT Ultra. Playing in 4K would be nice.

I'm thinking it would be good to get an RTX 4080, no? But will it be enough, or do I need a better CPU as well? Possibly wait for the 14700K? Or does AMD have good CPUs as well right now?
